As a Solutions Architect, my area of interest has always been automation and how it can make developers more effective and their lives easier. Many developers are embracing Kubernetes as an environment for their applications. However, setting up a vanilla Kubernetes cluster in the cloud can be quite challenging for a developer who is not keen on doing it the “hard way”. A local setup, with various tools, is easier but consumes resources on the developer’s computer. Wouldn’t it be great if we had a simple way to spin up and tear down a Kubernetes cluster in the cloud in a matter of minutes and use it immediately? Now, with Amazon EKS Auto Mode, it is possible! Let’s find out how.

Overview of a solution

Amazon Elastic Kubernetes Service (EKS) is a fully managed Kubernetes service that enables you to run Kubernetes seamlessly in both AWS Cloud and on-premises data centers. There are several methods of deploying an EKS cluster. You can find some of them in the documentation or in the launch blog. Today I will use eksctl, a tool which simplifies the deployment of an EKS cluster.

Prerequisites

Before you start, make sure that you have installed:

- AWS CLI

- eksctl, to create an EKS cluster

- kubectl, to get access to the EKS cluster

- (Optional) eks-node-viewer, to visualize EKS cluster nodes
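A quick way to confirm that the required tools are available is to look them up on the PATH. The helper below is a minimal sketch; the function name `missing_tools` is hypothetical and not part of any of the tools listed above.

```shell
# Print every tool from the argument list that is NOT found on PATH.
# Returns non-zero if at least one tool is missing.
missing_tools() {
  local status
  local tool
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}

# Example usage (run it before starting the walkthrough):
#   missing_tools aws eksctl kubectl || echo "Install the tools listed above first"
```

If the function prints nothing, all of the tools you passed in were found.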

Walkthrough

Creating an EKS cluster

- Configure AWS credentials.

- Create an EKS Auto Mode cluster with minimal required parameters:

    eksctl create cluster --name=easy-cluster --enable-auto-mode
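If you prefer to keep the cluster definition in a file, eksctl also accepts a ClusterConfig. The sketch below writes a config that should be roughly equivalent to the flags above. The file name `easy-cluster.yaml` and the `us-west-2` fallback Region are assumptions made for illustration.

```shell
# Write an eksctl ClusterConfig that mirrors the command-line flags.
# Assumption: fall back to us-west-2 when AWS_REGION is not set.
cat > easy-cluster.yaml <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: easy-cluster
  region: ${AWS_REGION:-us-west-2}
autoModeConfig:
  enabled: true
EOF

# Create the cluster from the file instead of using flags:
#   eksctl create cluster -f easy-cluster.yaml
```

A file like this can be kept in version control, so the same cluster can be recreated later with a single command.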

- Wait up to 15 minutes, and here it is: a brand new Kubernetes cluster. Task accomplished, we can ~~go home~~ start using the cluster. Could it be easier?

But is it fully functional? Let’s deploy a sample web application.

Deploying a sample application

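- Get access to the EKS cluster (this assumes that the AWS_REGION environment variable holds the Region where the cluster was created):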

    aws eks --region $AWS_REGION update-kubeconfig --name easy-cluster

- Get the EKS cluster nodes:

    kubectl get nodes

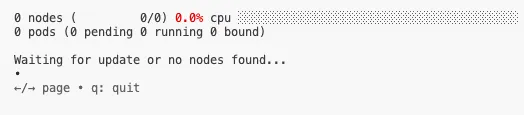

- You should receive “No resources found”. So, not only did we get an easy setup, but we also don’t waste resources on EC2 nodes until we deploy a workload.

- Deploy a sample load balancer workload to the EKS Auto Mode cluster using these instructions or the code snippet below:

    cat <<EOF | kubectl create -f -
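The body of the heredoc is not shown above. As a rough sketch only, and not necessarily the exact manifest used in this post, a workload like the following would match the names used in the later steps (`game-2048`, `deployment-2048`). The container image, replica count, service name, and ingress class names are assumptions modeled on the public EKS Auto Mode sample.

```shell
# Write an illustrative manifest to a file; every value here is an assumption.
cat > game-2048.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: game-2048
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: game-2048
  name: deployment-2048
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: app-2048
  template:
    metadata:
      labels:
        app.kubernetes.io/name: app-2048
    spec:
      containers:
        - name: app-2048
          image: public.ecr.aws/l6m2t8p7/docker-2048:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  namespace: game-2048
  name: service-2048
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: app-2048
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
---
apiVersion: eks.amazonaws.com/v1
kind: IngressClassParams
metadata:
  name: params
spec:
  scheme: internet-facing
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: alb
spec:
  controller: eks.amazonaws.com/alb
  parameters:
    apiGroup: eks.amazonaws.com
    kind: IngressClassParams
    name: params
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: game-2048
  name: ingress-2048
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /*
            pathType: ImplementationSpecific
            backend:
              service:
                name: service-2048
                port:
                  number: 80
EOF

# Apply it to the cluster:
#   kubectl apply -f game-2048.yaml
```

Writing the manifest to a file first makes it easy to review before applying it, and to reuse for cleanup with `kubectl delete -f game-2048.yaml`.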

- Check that all resources have been deployed properly. It might take 3-5 minutes to provision the Application Load Balancer (ALB):

    kubectl get nodes -o wide

    URL=http://$(kubectl get ingress -n game-2048 -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}')
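Because the load balancer hostname is empty until the ALB has been provisioned, it can help to poll for it instead of re-running the command by hand. The helper below is a minimal sketch; `wait_for_output` is a hypothetical name, and the attempt count and delay in the usage example are arbitrary.

```shell
# Retry a command until it prints non-empty output or attempts run out.
# Arguments: <max attempts> <seconds between attempts> <command...>
wait_for_output() {
  local attempts
  local delay
  local out
  local i
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Ignore failures of the command itself; only its output matters here.
    out=$("$@" 2>/dev/null) || true
    if [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    # Sleep only if another attempt will follow.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example usage: poll every 10 seconds, up to 30 times, then request the page.
#   HOST=$(wait_for_output 30 10 kubectl get ingress -n game-2048 \
#     -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}')
#   curl -s -o /dev/null -w '%{http_code}\n' "http://$HOST"
```

Note that the ALB may need a little more time after the hostname appears before it starts answering requests.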

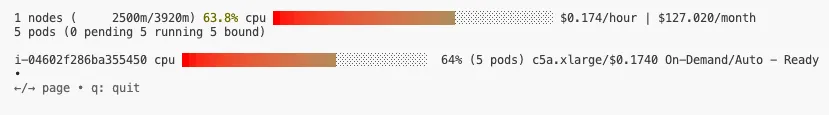

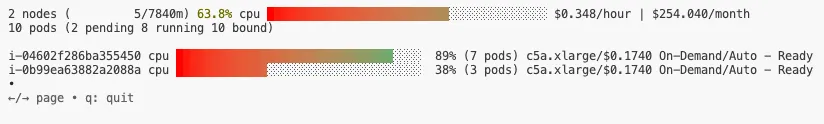

- Now you have an up and running EKS cluster with your workloads. You can also scale the number of replicas in the deployment to 10 and back to zero to see how EKS cluster nodes are created and destroyed.

    kubectl scale deployment deployment-2048 -n game-2048 --replicas=10

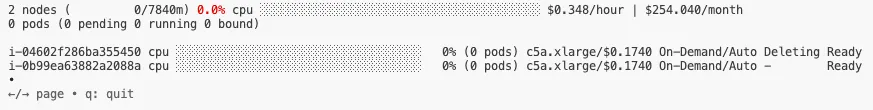

    kubectl scale deployment deployment-2048 -n game-2048 --replicas=0

Cleaning up

- Delete deployed resources:

    kubectl delete namespace game-2048

- Delete the EKS cluster:

    eksctl delete cluster --name=easy-cluster

Make sure that the CloudFormation stack for the EKS cluster has been deleted completely.
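One way to check this from the command line is to wait on the stack by name. The sketch below assumes that eksctl follows its usual naming convention of `eksctl-<cluster name>-cluster` for the cluster stack; the helper name `cluster_stack_name` is hypothetical.

```shell
# Build the CloudFormation stack name that eksctl is assumed to use
# for a cluster: eksctl-<cluster name>-cluster
cluster_stack_name() {
  printf 'eksctl-%s-cluster\n' "$1"
}

# Example usage: block until CloudFormation reports the stack as deleted.
#   aws cloudformation wait stack-delete-complete \
#     --stack-name "$(cluster_stack_name easy-cluster)"
```

If the wait command returns an error, check the stack events in the CloudFormation console to see which resource blocked the deletion.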

Conclusion

In this blog post, I demonstrated how you can deploy an Amazon EKS cluster in Auto Mode using eksctl. This setup not only gives you the ability to start fast, but also delivers a ready-to-use EKS cluster.

Developers can create an EKS cluster when they need it, with minimal effort, and delete it when it’s no longer needed, reducing expenses.

Let’s go build!